| Article ID | Journal | Published Year | Pages | File Type |
|---|---|---|---|---|
| 710470 | IFAC-PapersOnLine | 2016 | 6 | |

Abstract

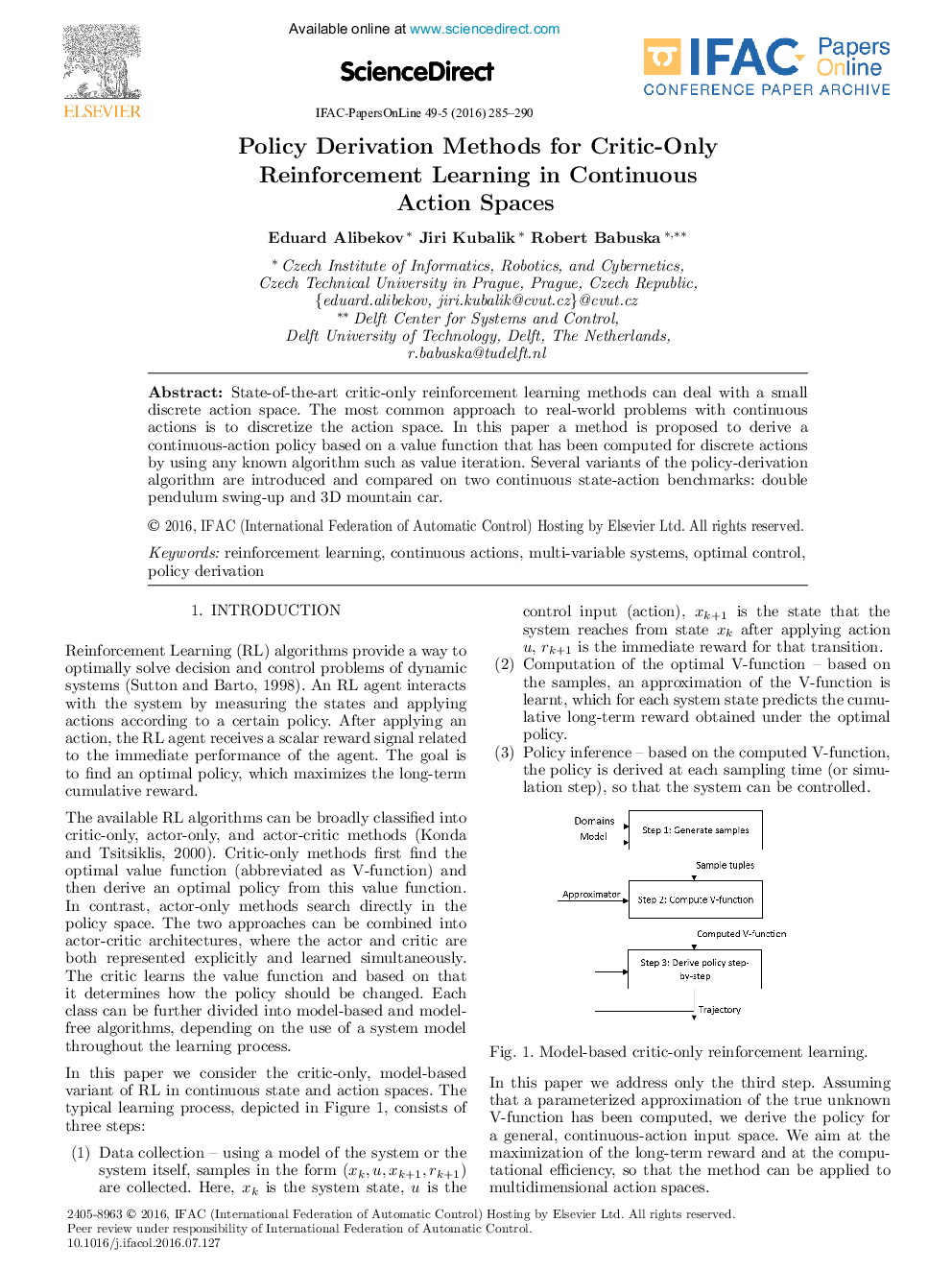

State-of-the-art critic-only reinforcement learning methods can handle only small, discrete action spaces. The most common approach to real-world problems with continuous actions is to discretize the action space. In this paper, a method is proposed to derive a continuous-action policy from a value function that has been computed for discrete actions using any known algorithm, such as value iteration. Several variants of the policy-derivation algorithm are introduced and compared on two benchmarks with continuous states and actions: double pendulum swing-up and 3D mountain car.
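
The abstract does not spell out the derivation procedure, so the following is only a minimal sketch of the general idea under our own assumptions. It uses a hypothetical 1-D toy problem with known deterministic dynamics, computes V by value iteration over three discrete actions on a state grid (with linear interpolation between grid points), and then derives a continuous action at decision time by maximizing r + γV(s') over a dense grid of candidate actions. The dense-grid search is a simple stand-in and is not necessarily one of the variants introduced in the paper; all names (`step`, `q_values`, `value_iteration`, `continuous_policy`) and constants are hypothetical.

```python
import numpy as np

# Hypothetical toy problem (illustration only, not from the paper):
# state s in [-1, 1], action a in [-1, 1], deterministic dynamics
# s' = clip(s + 0.1 * a), reward r = -(s')**2 (drive the state to 0).
GAMMA = 0.9
STATES = np.linspace(-1.0, 1.0, 41)             # grid on which V is stored
DISCRETE_ACTIONS = np.array([-1.0, 0.0, 1.0])   # coarse actions used in value iteration


def step(s, a):
    """Return (next_state, reward) for state s and action(s) a."""
    s_next = np.clip(s + 0.1 * a, -1.0, 1.0)
    return s_next, -s_next**2


def q_values(V, s, actions):
    """Q(s, a) = r + gamma * V(s'), with V linearly interpolated between grid points."""
    s_next, r = step(s, actions)
    return r + GAMMA * np.interp(s_next, STATES, V)


def value_iteration(n_iter=500):
    """Compute V on the state grid using only the discrete actions."""
    V = np.zeros_like(STATES)
    for _ in range(n_iter):
        V = np.array([q_values(V, s, DISCRETE_ACTIONS).max() for s in STATES])
    return V


def discrete_policy(V, s):
    """Greedy policy restricted to the discrete actions used during learning."""
    return DISCRETE_ACTIONS[np.argmax(q_values(V, s, DISCRETE_ACTIONS))]


def continuous_policy(V, s, n_candidates=201):
    """Derive a continuous action by maximizing Q(s, a) over a dense action grid.

    The same V is reused; only the set of actions searched at decision time grows.
    """
    candidates = np.linspace(-1.0, 1.0, n_candidates)
    return candidates[np.argmax(q_values(V, s, candidates))]


V = value_iteration()
s = 0.05
a_disc = discrete_policy(V, s)    # restricted to -1, 0 or 1
a_cont = continuous_policy(V, s)  # any candidate in [-1, 1]
print(f"discrete: {a_disc:+.2f}, continuous: {a_cont:+.2f}")
```

In this toy setting, at s = 0.05 the discrete greedy policy can only choose between overshooting (a = -1) and staying put (a = 0), whereas the continuous search selects a = -0.5, which brings the state exactly to 0 in one step. Because the dense candidate grid contains the original discrete actions, the derived action is never worse than the discrete one with respect to the same Q estimate.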

Authors

Eduard Alibekov, Jiri Kubalik, Robert Babuska